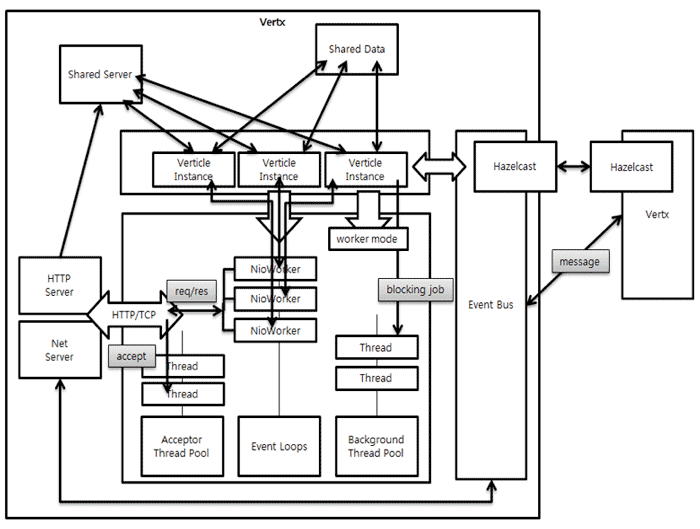

Vertx Design

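Multi-language support: essentially any language that runs on the JVM is supported.

Simple concurrency model: you write code as if it were single-threaded, and Vert.x takes care of multithreaded concurrency.

Event Bus: within the same Vert.x cluster, verticle instances can communicate through the event bus. Cross-process communication over TCP is also supported (tcp-eventbus-bridge).

Relationship between Vert.x and Netty: Vert.x uses Netty for all of its I/O.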
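For the cross-process case, a minimal sketch of exposing the event bus over TCP might look like the following. It assumes Vert.x 4.x with the `vertx-tcp-eventbus-bridge` module on the classpath; the addresses `in` and `out`, the port 7000 and the `startBridge` helper are hypothetical choices for illustration. A client in another process (not necessarily a JVM one) would connect to that port and exchange length-prefixed JSON frames with the bridge.

```java
import io.vertx.core.Future;
import io.vertx.core.Vertx;
import io.vertx.ext.bridge.BridgeOptions;
import io.vertx.ext.bridge.PermittedOptions;
import io.vertx.ext.eventbus.bridge.tcp.TcpEventBusBridge;

public class TcpBridgeExample {

  // Hypothetical helper: TCP clients may send to "in" and receive from "out"
  static Future<TcpEventBusBridge> startBridge(Vertx vertx, int port) {
    BridgeOptions options = new BridgeOptions()
        .addInboundPermitted(new PermittedOptions().setAddress("in"))
        .addOutboundPermitted(new PermittedOptions().setAddress("out"));
    return TcpEventBusBridge.create(vertx, options).listen(port);
  }

  public static void main(String[] args) {
    Vertx vertx = Vertx.vertx();

    // In-process code keeps using the ordinary event bus API
    vertx.eventBus().consumer("in", msg -> System.out.println("From TCP client: " + msg.body()));

    startBridge(vertx, 7000) // port 7000 is an arbitrary choice
        .onSuccess(bridge -> System.out.println("TCP event bus bridge listening on 7000"))
        .onFailure(Throwable::printStackTrace);
  }
}
```

Only addresses listed in the permitted options cross the bridge, so the in-process event bus is not exposed wholesale.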

Vert.x Terminology

Verticle

Verticles are chunks of code that are deployed and run by Vert.x. A Vert.x instance maintains N event loop threads; by default, N is twice the number of CPU cores. Verticles can be written in any of the languages that Vert.x supports, and a single application can include verticles written in multiple languages.
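A minimal sketch of a verticle and its deployment, assuming Vert.x 4.x core on the classpath. `HelloVerticle` and the `deploy` helper are hypothetical names; `DeploymentOptions.setInstances` asks Vert.x to create several instances, which it spreads over its event loops.

```java
import io.vertx.core.AbstractVerticle;
import io.vertx.core.DeploymentOptions;
import io.vertx.core.Future;
import io.vertx.core.Vertx;
import io.vertx.core.VertxOptions;

public class HelloVerticle extends AbstractVerticle {

  @Override
  public void start() {
    // Runs on the event-loop thread this instance was assigned to
    System.out.println("Instance started on " + Thread.currentThread().getName());
  }

  // Hypothetical helper: deploys several instances of this verticle by class name
  static Future<String> deploy(Vertx vertx, int instances) {
    return vertx.deployVerticle(HelloVerticle.class.getName(),
        new DeploymentOptions().setInstances(instances));
  }

  public static void main(String[] args) {
    // Default event-loop pool size: 2 x the number of cores
    System.out.println("Event loops: " + new VertxOptions().getEventLoopPoolSize());
    Vertx vertx = Vertx.vertx();
    deploy(vertx, 4).onSuccess(id -> System.out.println("Deployment id: " + id));
  }
}
```

Deploying by class name (rather than passing a single object) is what lets Vert.x instantiate the verticle several times.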

Vert.x Instance

A verticle is executed within a Vert.x instance, and each Vert.x instance runs in its own JVM instance. Many verticles can therefore run simultaneously in a single Vert.x instance. Each verticle can have its own class loader, which prevents verticles from interacting directly through static members and global variables. Verticles can also run simultaneously on several hosts on the network, with the Vert.x instances clustered through the event bus.

Concurrency

A verticle instance is always executed on the same thread. Because all code can be written as if it were single-threaded, developing on Vert.x is easy, and race conditions and deadlocks can be avoided.
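A small sketch that checks this guarantee, assuming Vert.x 4.x core. The verticle keeps plain, unsynchronized fields and records whether three periodic timer callbacks all ran on the thread that ran `start()`; the class name and the `result` promise are hypothetical.

```java
import io.vertx.core.AbstractVerticle;
import io.vertx.core.Promise;
import io.vertx.core.Vertx;

public class SameThreadVerticle extends AbstractVerticle {

  // Completed after three ticks with "did every handler run on the start() thread?"
  final Promise<Boolean> result = Promise.promise();

  // Plain fields: no volatile or synchronized needed, because only
  // this instance's event-loop thread ever touches them
  private String startThread;
  private int ticks;
  private boolean sameThread = true;

  @Override
  public void start() {
    startThread = Thread.currentThread().getName();
    vertx.setPeriodic(50, timerId -> {
      ticks++;
      sameThread &= startThread.equals(Thread.currentThread().getName());
      if (ticks == 3) {
        vertx.cancelTimer(timerId);
        result.complete(sameThread);
      }
    });
  }

  public static void main(String[] args) {
    Vertx vertx = Vertx.vertx();
    SameThreadVerticle verticle = new SameThreadVerticle();
    vertx.deployVerticle(verticle);
    verticle.result.future().onSuccess(same -> {
      System.out.println("All handlers on one thread: " + same);
      vertx.close();
    });
  }
}
```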

Event-based Programming Model

Like Node.js, Vert.x provides an event-based programming model. When you write a server with Vert.x, most of the code you write consists of event handlers. For example, to receive data from a TCP socket, you register a handler that Vert.x calls whenever data arrives.
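The TCP example can be sketched as a small echo server, assuming Vert.x 4.x core. The class name, the `start` helper and port 1234 are hypothetical; note that every piece of behavior is attached as a handler rather than written as a read loop.

```java
import io.vertx.core.Future;
import io.vertx.core.Vertx;
import io.vertx.core.net.NetServer;

public class EchoServer {

  static Future<NetServer> start(Vertx vertx, int port) {
    NetServer server = vertx.createNetServer();

    // Called once for every accepted TCP connection
    server.connectHandler(socket -> {
      // Called each time a chunk of data arrives on this socket: echo it back
      socket.handler(buffer -> socket.write(buffer));
      // Called when the peer closes the connection
      socket.closeHandler(v -> System.out.println("Connection closed"));
    });

    return server.listen(port);
  }

  public static void main(String[] args) {
    Vertx vertx = Vertx.vertx();
    start(vertx, 1234) // port 1234 is an arbitrary choice
        .onSuccess(s -> System.out.println("Echo server on port " + s.actualPort()))
        .onFailure(Throwable::printStackTrace);
  }
}
```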

Event Loops

A Vert.x instance manages its thread pool internally, and Vert.x matches the number of threads in the pool to the number of CPU cores as closely as possible.

Each thread runs an event loop. On each iteration, the event loop checks for events: for example, whether there is data to read on a socket, or which timer has fired. If there is an event to process, Vert.x calls the corresponding handler. Extra work is needed when a handler takes too long or performs blocking I/O, because it holds up every other event waiting on that loop.
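One common form of that extra work is moving the blocking call onto a worker thread. The sketch below assumes Vert.x 4.5 or later, where `executeBlocking` accepts a `Callable` (older 4.x releases take a `Handler<Promise<T>>` instead); `slowLookup` is a hypothetical stand-in for blocking I/O. Vert.x also logs a blocked-thread warning when a handler occupies an event loop for longer than its configured limit (2 seconds by default).

```java
import io.vertx.core.Future;
import io.vertx.core.Vertx;

public class BlockingLookup {

  // Hypothetical stand-in for blocking I/O such as a JDBC query or a legacy HTTP client
  static String slowLookup(String key) throws InterruptedException {
    Thread.sleep(500);
    return "value-for-" + key;
  }

  // Runs the blocking call on a worker thread; the returned Future completes
  // back on the caller's context (the event loop, when called from a handler)
  static Future<String> lookupAsync(Vertx vertx, String key) {
    return vertx.executeBlocking(() -> slowLookup(key));
  }

  public static void main(String[] args) {
    Vertx vertx = Vertx.vertx();
    // runOnContext puts this code on an event loop, as a real handler would be
    vertx.runOnContext(v ->
        lookupAsync(vertx, "user-42").onSuccess(value ->
            System.out.println(value + " received on " + Thread.currentThread().getName())));
  }
}
```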

Message Passing

Verticles communicate through the event bus. If each verticle is regarded as an actor, message passing resembles the actor model made famous by the Erlang programming language. A Vert.x server instance hosts many verticle instances and passes messages among them. As a result, the system can scale across the available cores without any verticle's code having to run on multiple threads.
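A request/reply exchange over the event bus, sketched under the assumption of Vert.x 4.x core. The `Responder` verticle behaves like an actor whose mailbox is the hypothetical address `ping`; the `roundTrip` helper is also hypothetical.

```java
import io.vertx.core.AbstractVerticle;
import io.vertx.core.Future;
import io.vertx.core.Vertx;

public class PingPong {

  // Acts like an actor whose mailbox is the event bus address "ping"
  static class Responder extends AbstractVerticle {
    @Override
    public void start() {
      vertx.eventBus().<String>consumer("ping", msg -> msg.reply("pong: " + msg.body()));
    }
  }

  // Deploys the responder, then sends one request and maps the reply to its body
  static Future<String> roundTrip(Vertx vertx, String text) {
    return vertx.deployVerticle(new Responder())
        .compose(id -> vertx.eventBus().<String>request("ping", text))
        .map(reply -> reply.body());
  }

  public static void main(String[] args) {
    Vertx vertx = Vertx.vertx();
    roundTrip(vertx, "hello").onSuccess(System.out::println); // prints "pong: hello"
  }
}
```

The sender never calls the responder directly; the two share nothing but an address, which is what lets Vert.x place them on different event loops (or, in a cluster, different hosts).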

Shared data

Message passing is very useful, but it is not the best approach for every kind of concurrency problem in an application. A cache is one of the most common examples: if a cache is held by a single verticle and other verticles also need it, each verticle has to manage its own copy of the same data, which is very inefficient.

For this reason, Vert.x provides a way to share data globally: the shared map. Verticles can share only immutable data through it.
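A minimal sketch of the shared map using Vert.x 4.x's `LocalMap`, which is shared by all verticles within one Vert.x instance (cluster-wide data uses `getAsyncMap` instead). In Vert.x 4, immutable values such as `String` are stored as-is, types like `JsonObject` and `Buffer` are copied, and other mutable types are rejected. The map name `config-cache` and the `writeThenRead` helper are hypothetical.

```java
import io.vertx.core.Vertx;
import io.vertx.core.shareddata.LocalMap;

public class SharedCacheExample {

  // Two independent lookups of the same name return the same underlying map,
  // just as they would from two different verticles in this Vert.x instance
  static String writeThenRead(Vertx vertx) {
    LocalMap<String, String> writerView = vertx.sharedData().getLocalMap("config-cache");
    writerView.put("greeting", "hello"); // String is immutable, so it can be shared as-is

    LocalMap<String, String> readerView = vertx.sharedData().getLocalMap("config-cache");
    return readerView.get("greeting");
  }

  public static void main(String[] args) {
    Vertx vertx = Vertx.vertx();
    System.out.println(writeThenRead(vertx)); // prints "hello"
    vertx.close();
  }
}
```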

Vert.x Architecture

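A verticle is the unit of execution, and multiple verticles can run concurrently in the same Vert.x instance. Verticles execute on event-loop threads. Multiple Vert.x instances can run on multiple hosts, and the verticles communicate through the event bus.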

Vert.x Thread Pool

Vert.x has three types of thread pools:

- Acceptor: threads that accept socket connections. One thread is created per port.

- Event Loops (also called run loops): the number of threads equals the number of cores. When an event occurs, the loop executes the corresponding handler, and once the handler returns, it goes back to reading the next event.

- Background: used when an event-loop handler needs an additional thread. Users can set the number of threads with the vertx.backgroundPoolSize setting; the default is 20. Too many threads increase context-switching costs, so choose this value with care.
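In Vert.x 3/4 these pools are sized through `VertxOptions`. A minimal sketch, assuming Vert.x 4.x core: the event-loop pool is set to one thread per core as described above (the built-in default is twice the number of cores), and the worker pool, which we take to be roughly the modern counterpart of the background pool, is set to 20, which is also its default. The `options` helper is hypothetical.

```java
import io.vertx.core.Vertx;
import io.vertx.core.VertxOptions;

public class PoolSizingExample {

  static VertxOptions options() {
    return new VertxOptions()
        // One event loop per core (the Vert.x 3/4 default is 2 x cores)
        .setEventLoopPoolSize(Runtime.getRuntime().availableProcessors())
        // Worker pool used by executeBlocking and worker verticles; 20 is also the default
        .setWorkerPoolSize(20);
  }

  public static void main(String[] args) {
    VertxOptions opts = options();
    Vertx vertx = Vertx.vertx(opts);
    System.out.println("event loops = " + opts.getEventLoopPoolSize()
        + ", worker threads = " + opts.getWorkerPoolSize());
    vertx.close();
  }
}
```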

In more detail, the event loops use Netty's NioWorker as-is, and every handler registered by a verticle runs on an event loop. Each verticle instance is bound to a specific NioWorker, which guarantees that a verticle instance is always executed on the same thread. Verticles can therefore be written as single-threaded code and still be thread-safe.

Why is Hazelcast Used?

Vert.x uses Hazelcast, an In-Memory Data Grid (IMDG). The Hazelcast API is not exposed directly to users; Vert.x uses it internally. When Vert.x starts, Hazelcast is started in embedded mode.

Hazelcast is a type of distributed storage. Embedding such storage in a server framework gives applications the benefits expected of a distributed environment, such as data shared across nodes, without running a separate storage server.

With Hazelcast, a message queue can be used without additional cost or investment: no extra servers, and no monitoring of separate message-queue instances. As mentioned above, Hazelcast is distributed storage, and it can replicate stored data for reliability. By using this distributed storage as a queue, a server application built on Vert.x becomes both a message-processing server and a distributed queue.
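In current Vert.x versions the cluster manager is pluggable, and the embedded Hazelcast member is started when Vert.x runs in clustered mode. A sketch of starting such a node, assuming Vert.x 4.x with the `vertx-hazelcast` module on the classpath; member discovery follows Hazelcast's configuration (a `cluster.xml` on the classpath overrides the bundled default). The `startNode` helper, the address `jobs` and the map name `counters` are hypothetical.

```java
import io.vertx.core.Future;
import io.vertx.core.Vertx;
import io.vertx.core.VertxOptions;
import io.vertx.spi.cluster.hazelcast.HazelcastClusterManager;

public class ClusteredNode {

  // Starts a Vert.x instance with an embedded Hazelcast member as its cluster manager
  static Future<Vertx> startNode() {
    VertxOptions options = new VertxOptions().setClusterManager(new HazelcastClusterManager());
    return Vertx.clusteredVertx(options);
  }

  public static void main(String[] args) {
    startNode().onSuccess(vertx -> {
      // The event bus now spans every node in the same Hazelcast cluster
      vertx.eventBus().consumer("jobs", msg -> System.out.println("Got job: " + msg.body()));

      // Cluster-wide map whose entries are stored in Hazelcast
      vertx.sharedData().<String, Integer>getAsyncMap("counters")
          .compose(map -> map.put("nodes-started", 1))
          .onFailure(Throwable::printStackTrace);
    }).onFailure(Throwable::printStackTrace);
  }
}
```

Starting a second copy of this program on another host in the same network segment should make both consumers reachable through the one `jobs` address.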

These benefits make Vert.x a strong framework in a distributed environment.

Reference:

https://www.cubrid.org/blog/inside-vertx-comparison-with-nodejs/

https://www.cubrid.org/blog/understanding-vertx-architecture-part-2

https://medium.com/@levon_t/java-vert-x-starter-guide-part-1-30cb050d68aa

https://medium.com/@levon_t/java-vert-x-starter-guide-part-2-worker-verticles-c49866df44ab