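Recently a friend of mine fell head over heels for the Korean idol group BTS (防弹少年团) and asked me to write a program that watches two boards, pt and clip, on the Korean news site instiz in real time and automatically posts to Weibo to notify her whenever news about her idols appears. I thought it was a fun idea, the program is not complicated to build, and it is hard to say no when a girl asks, so I agreed. No time like the present, so here is how I built it.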

Simulating a Weibo login

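Posting to Weibo is simple if you use the Weibo API. However, when I registered an in-site app to get an APPKEY and ACCESS_TOKEN, the application was rejected after two days of review (admittedly, I may have filled in the form too carelessly). Too impatient to wait, I decided to simulate a browser login and post that way instead.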

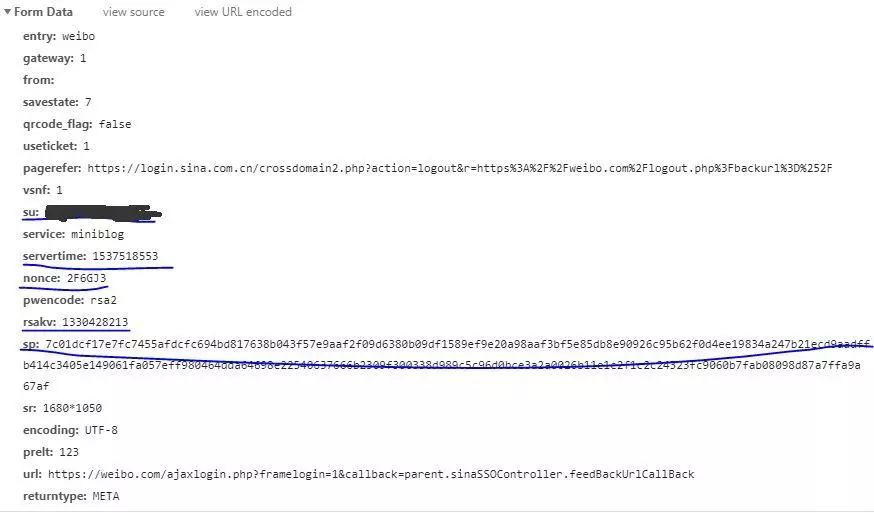

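First we need to work out which form fields a simulated login has to submit. Open Chrome and inspect the form posted to https://login.sina.com.cn/sso/login.php?client=ssologin.js(v1.4.19) during login; the following fields deserve attention:

Of these, servertime, nonce and rsakv presumably come from data fetched by an earlier GET request. Looking back through the earlier requests, one URL containing prelogin stands out. Its response looks like this: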

    {
        "retcode": 0,
        "servertime": 1537519779,
        "pcid": "gz-7b560c8c199aa33a6b201149095489a4d147",
        "nonce": "H6ITP0",
        "pubkey": "EB2A38568661887FA180BDDB5CABD5F21C7BFD59C090CB2D245A87AC253062882729293E5506350508E7F9AA3BB77F4333231490F915F6D63C55FE2F08A49B353F444AD3993CACC02DB784ABBB8E42A9B1BBFFFB38BE18D78E87A0E41B9B8F73A928EE0CCEE1F6739884B9777E4FE9E88A1BBE495927AC4A799B3181D6442443",
        "rsakv": "1330428213",
        "is_openlock": 0,
        "lm": 1,
        "smsurl": "https:\/\/login.sina.com.cn\/sso\/msglogin?entry=weibo&mobile=18800190793&s=f0cd668a3b51745b707c94f41a090c98",
        "showpin": 0,
        "exectime": 110
    }

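Since the prelogin request passes a callback parameter, the body most likely arrives wrapped in that callback name rather than as bare JSON. The following is a minimal sketch of unwrapping it; the helper name `parse_jsonp` and the shortened sample string are made up for illustration and are not taken from the site.

```python
import json
import re


def parse_jsonp(text):
    """Strip a JSONP wrapper such as callback({...}) and decode the JSON inside."""
    match = re.search(r"\((.*)\)", text, re.S)
    if match is None:
        raise ValueError("no JSONP payload found")
    return json.loads(match.group(1))


# Shortened, hypothetical sample shaped like the response shown above
sample = (
    'sinaSSOController.preloginCallBack({"retcode":0,'
    '"servertime":1537519779,"nonce":"H6ITP0","rsakv":"1330428213"})'
)
data = parse_jsonp(sample)
print(data["servertime"], data["nonce"], data["rsakv"])
```

Raising an error when no payload is found makes a changed response format fail loudly instead of surfacing later as an obscure `AttributeError`.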
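The response does contain the values we need. The other two fields, su and sp, should then be the encrypted username and password. But how does the site encrypt them?

Looking closely, a file named ssologin.js shows up in both the prelogin and login requests, so the encryption is probably done there. The file is large, but we only need to search for the parts we care about, and sure enough it contains the following code: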

    request.su = sinaSSOEncoder.base64.encode(urlencode(username))
    RSAKey.setPublic(me.rsaPubkey, "10001");
    password = RSAKey.encrypt([me.servertime, me.nonce].join("\t") + "\n" + password)
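The two string transformations in the snippet above can be tried on their own with the standard library, before any RSA is involved. This is only a sketch: the function names are hypothetical, and `urllib.parse.quote` is assumed to be a close enough stand-in for the `urlencode` helper in ssologin.js.

```python
import base64
from urllib.parse import quote, unquote


def encode_su(username):
    """URL-encode the username, then Base64-encode it (the su field)."""
    return base64.b64encode(quote(username).encode("utf-8")).decode("ascii")


def build_sp_plaintext(servertime, nonce, password):
    """Build the string that is RSA-encrypted to produce the sp field."""
    return f"{servertime}\t{nonce}\n{password}"


su = encode_su("user@example.com")
print(su)

plain = build_sp_plaintext(1537519779, "H6ITP0", "secret")
print(repr(plain))
```

Keeping the plaintext construction separate from the encryption makes it easy to check the tab and newline separators, which are the part most likely to be mistyped.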

OK, all that remains is to translate this JavaScript into Python. The complete code is as follows:

importrequests

importbase64

importre

importrsa

importjson

importbinascii

importtime

classweibo(object):

def__init__(self, username, password):

self.username = username

self.password = password

defencrypted_username(self):

# 对用户名加密,返回su

returnbase64.b64encode(bytes(self.username, encoding="utf-8")).decode("utf-8")

defencrypted_password(self):

# 对密码加密,返回sp

data=self.get_prelogin_data()

rsa_e=65537

password_str=str(data["servertime"])+"\t"+str(data["nonce"])+"\n"+self.password

key=rsa.PublicKey(int(str(data["pubkey"]),16), rsa_e)

password_encrypt=rsa.encrypt(password_str.encode("utf-8"), key)

returnbinascii.b2a_hex(password_encrypt).decode("utf-8")

defget_prelogin_data(self):

# 以json格式返回prelogin的数据

url ='http://login.sina.com.cn/sso/prelogin.php?entry=weibo&callback=sinaSSOController.preloginCallBack&su=&'+ self.encrypted_username() +'&rsakt=mod&checkpin=1&client=ssologin.js(v1.4.18)'

withrequests.Session()assess:

r=sess.get(url, timeout=2)

pattern = re.compile(r"\((.*)\)")

data = pattern.search(r.text).group(1)

json_data=json.loads(data)

returnjson_data

deflogin_and_send(self, text):

prelogin_data=self.get_prelogin_data()

data={

"entry":"weibo",

"gateway":"1",

"from":"",

"savestate":"7",

"qrcode_flase":"false",

"useticket":"1",

"pagerefer":r"http://passport.weibo.com/visitor/visitor?entry=miniblog&a=enter&url=http%3A%2F%2Fweibo.com%2F&domain=.weibo.com&ua=php-sso_sdk_client-0.6.14",

"vsnf":"1",

"su":self.encrypted_username(),

"service":"miniblog",

"servertime":prelogin_data['servertime'],

"nonce":prelogin_data['nonce'],

"pwencode":"rsa2",

"rsakv":prelogin_data['rsakv'],

"sp":self.encrypted_password(),

"sr":"1680*1050",

"encoding":"UTF-8",

"prelt":"122",

"url":"https://weibo.com/ajaxlogin.php?framelogin=1&callback=parent.sinaSSOController.feedBackUrlCallBack",

"returntype":"META"

}

url ='http://login.sina.com.cn/sso/login.php?client=ssologin.js(v1.4.18)'

headers = {

"User-Agent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.87 Safari/537.36"

}

#设置发微博时需要提交的表单

weibo_data={

"location":"v6_content_home",

"appkey":"",

"style_type":"1",

"pic_id":"",

"text": text,

"pdetail":"",

"rank":"1",#设置仅自己可见,如果对所有人可见改为0

"rankid":"",

"module":"stissue",

"pub_type":"dialog",

"_t":"0",

}

weibo_headers = {

"User-Agent":"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.87 Safari/537.36",

"referer":""

}

withrequests.Session()assess:

r=sess.post(url, headers=headers, data=data, timeout=2, allow_redirects=True)

pattern1=re.compile(r"location\.replace\(\'(.*)\'\)")

pattern2=re.compile(r"userdomain\":\"(.*)\"},")

redirent_url=pattern1.search(r.content.decode("GBK")).group(1)

r1=sess.get(redirent_url)

login_url="https://weibo.com/"+ pattern2.search(r1.content.decode("utf-8")).group(1)

r2=sess.get(login_url)

weibo_headers["referer"]=r2.url

login_url1="https://weibo.com/aj/mblog/add?ajwvr=6&__rnd="+str(int(time.time()*1000))

r3=sess.post(login_url1, headers=weibo_headers, data=weibo_data, timeout=2)

if(r3.status_code==requests.codes.ok):

print("send weibo successfully!!!")

else:

print("there is something wrong!!!")

if__name__ =='__main__':

wb=weibo("***","***")

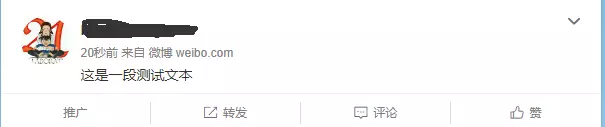

wb.login_login_send("这是一段测试文本")

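With that, you can write a program that posts to Weibo automatically without applying for a key. Of course, if you can get a key, that is still the better option.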
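The most fragile step in the login flow is pulling the redirect URL out of the login response, because a failed login returns a page without it. Here is a small sketch of that extraction with an explicit failure path; the function name and the sample HTML are invented for illustration, and the real response body may be shaped differently.

```python
import re

# Matches location.replace('...') or location.replace("...")
REDIRECT_RE = re.compile(r"location\.replace\(['\"](.*?)['\"]\)")


def extract_redirect(html):
    """Return the URL passed to location.replace(), or None if it is absent."""
    match = REDIRECT_RE.search(html)
    return match.group(1) if match else None


# Hypothetical response bodies
ok_page = "<script>location.replace('https://example.com/next?ticket=abc');</script>"
bad_page = "<html>login failed</html>"

print(extract_redirect(ok_page))
print(extract_redirect(bad_page))
```

Returning `None` lets the caller report a failed login clearly instead of crashing on `.group(1)`.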

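Monitoring the news site and posting to Weibo automatically

This part is easy to implement: define a few keywords and crawl each board once every 60 seconds (the loop sleeps 30 seconds and alternates between the two boards). If a new headline contains a keyword, post its title and link to Weibo. The implementation is as follows: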

    import re
    import time

    import requests
    from bs4 import BeautifulSoup

    from Weibo import weibo  # assumes the login class above is saved as Weibo.py

    KEYWORDS = ["BTS", "방탄소년단", "김석진", "김남준", "민윤기", "정호석", "박지민", "김태형", "전정국"]


    class NewsSpider(object):
        """Polls the instiz pt and clip boards and posts matching headlines to Weibo."""

        def __init__(self):
            super(NewsSpider, self).__init__()
            self.url = ["http://www.instiz.net/pt", "http://www.instiz.net/clip"]
            self.newslist = []  # IDs of posts that have already been announced
            self.wb = weibo("***", "***")
            print("complete initializing")

        def checkkeywords(self, title):
            # True if the title contains at least one keyword
            return any(keyword in title for keyword in KEYWORDS)

        def updatenewslist(self, url):
            pattern = re.compile(r'\d{6,7}')  # post ID embedded in the link
            with requests.Session() as sess:
                r = sess.get(url, timeout=2)
                soup = BeautifulSoup(r.content, "lxml")
                listsubject = soup.find_all(id="subject")
                for subject in listsubject:
                    href = subject.a.get('href')
                    match = pattern.search(href)
                    if match:  # skip links that carry no post ID
                        news_id = match.group()
                        title = subject.a.text
                        if self.checkkeywords(title) and news_id not in self.newslist:
                            self.newslist.append(news_id)
                            self.updateWeibo(url + "/" + news_id, title)

        def runforever(self):
            i = 0
            failnum = 0
            while True:
                try:
                    self.updatenewslist(self.url[i])  # two boards, polled alternately
                    failnum = 0
                except Exception:
                    # Count consecutive failures (for example, the IP being banned)
                    # and print a warning after 60 in a row
                    failnum = failnum + 1
                    if failnum >= 60:
                        print("There is something wrong with your code!!!")
                        failnum = 0
                finally:
                    i = (i + 1) % 2
                    time.sleep(30)

        def updateWeibo(self, newsurl, newstitle):
            # Message: "Your idols have news again: <title>. See the link for details -> <url>"
            text = "你的偶像们又有新消息了:" + newstitle + "。详情查看链接->" + newsurl
            print(text)
            self.wb.login_and_send(text)


    if __name__ == '__main__':
        ns = NewsSpider()
        ns.runforever()
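The keyword filter and the de-duplication step can be checked offline, without touching the network or Weibo. The sketch below is one way to do that; `select_new_posts` and the sample titles are hypothetical, and it uses a set for the seen IDs where the spider above uses a list.

```python
KEYWORDS = ["BTS", "방탄소년단"]


def select_new_posts(posts, keywords, seen):
    """Return (post_id, title) pairs that match a keyword and are not in seen.

    Matching IDs are added to seen so a later call skips them.
    """
    fresh = []
    for post_id, title in posts:
        if post_id in seen:
            continue
        if any(keyword in title for keyword in keywords):
            seen.add(post_id)
            fresh.append((post_id, title))
    return fresh


seen = set()
batch = [("1234567", "BTS comeback teaser"), ("1234568", "weather report")]

first = select_new_posts(batch, KEYWORDS, seen)   # first poll of the board
second = select_new_posts(batch, KEYWORDS, seen)  # same page polled again
print(first)
print(second)
```

A set keeps the membership test cheap as the list of announced posts grows over days of polling.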

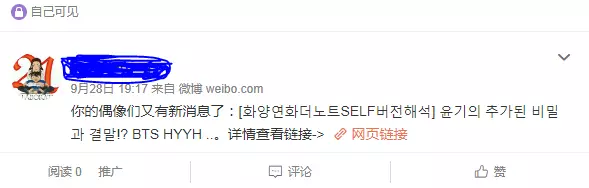

具体效果如图:

Afterword

Once I handed the program over to her, that was the last I heard of it. Used and then forgotten, just as you would expect~
