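I recently interviewed with the recommendation team at NetEase News, was asked some recommender-system questions, and got thoroughly trounced. So I went off and crammed the material in frustration; this post records some of what I learned about recommender systems, along with an implementation.

A simple music recommendation system: "simple" here means the dataset only has about 13,000 records (1291 playlists × 10 songs), as explained in detail below.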


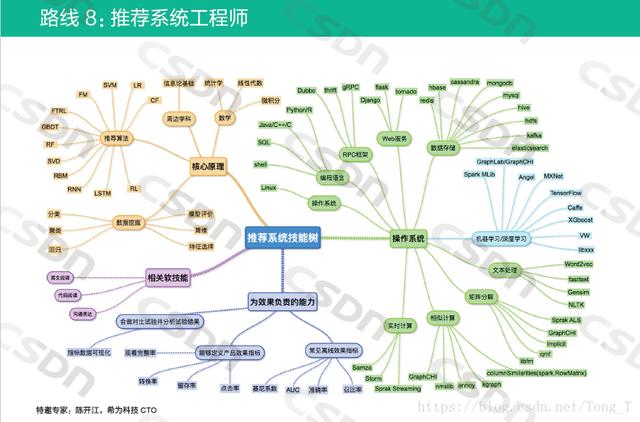

1. Recommender-system engineer growth roadmap

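2. Data acquisition

With any machine learning approach, the first thing to consider is the data: where does it come from?

A company like NetEase Cloud Music can get users' favorites and play data directly. We need a shortcut instead: what data carries information about users' musical interests and is also something we can actually obtain? Right: the popular playlists, together with the details of the songs in each playlist.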

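3. Data crawler script

Code notes:

1. NetEase Cloud Music's anti-crawling measures pass dynamic, encrypted parameters in the request payload, which makes it very hard to get the playlist data packets. An expert cracked this dynamic parameter encryption; that is the AES (plus RSA) encryption in the code.

2. For reasons I don't understand, this Python 2.7 script can only fetch 10 songs from each playlist (even though the request asks for `'limit': '100'`), which drastically shrinks the raw data available to our recommender. I tried for a long time without success, so suggestions are welcome. Either way, with this little data we will simply keep the implementation simple.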

3. There are 1291 playlists (JSON files) in total, each containing 10 songs, so the data we actually model has only about 13,000 instances.

```python
# -*- coding:utf-8 -*-
"""
Crawl the data packets of NetEase Cloud Music playlists and save each one as a JSON file.
Python 2.7 environment.
"""
from __future__ import print_function
import requests
import json
import os
import base64
import binascii
import codecs
import urllib
import urllib2
from Crypto.Cipher import AES
from bs4 import BeautifulSoup


class NetEaseAPI:
    def __init__(self):
        self.header = {
            'Host': 'music.163.com',
            'Origin': 'https://music.163.com',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:56.0) Gecko/20100101 Firefox/56.0',
            'Accept': 'application/json, text/javascript',
            'Accept-Language': 'zh-CN,zh;q=0.9',
            'Connection': 'keep-alive',
            'Content-Type': 'application/x-www-form-urlencoded',
        }
        self.cookies = {'appver': '1.5.2'}
        self.playlist_class_dict = {}
        self.session = requests.Session()

    def _http_request(self, method, action, query=None, urlencoded=None, callback=None, timeout=None):
        # Send the request and parse the JSON response body
        connection = json.loads(self._raw_http_request(method, action, query, urlencoded, callback, timeout))
        return connection

    def _raw_http_request(self, method, action, query=None, urlencoded=None, callback=None, timeout=None):
        if method == 'GET':
            # Headers must be passed by keyword: Request's second positional argument is the POST body
            request = urllib2.Request(action, headers=self.header)
            response = urllib2.urlopen(request)
            connection = response.read()
        elif method == 'POST':
            data = urllib.urlencode(query)
            request = urllib2.Request(action, data, self.header)
            response = urllib2.urlopen(request)
            connection = response.read()
        return connection

    @staticmethod
    def _aes_encrypt(text, secKey):
        # PKCS#7-style padding to a multiple of 16 bytes, then AES-CBC with a fixed IV
        pad = 16 - len(text) % 16
        text = text + chr(pad) * pad
        encryptor = AES.new(secKey, AES.MODE_CBC, '0102030405060708')
        ciphertext = encryptor.encrypt(text)
        ciphertext = base64.b64encode(ciphertext).decode('utf-8')
        return ciphertext

    @staticmethod
    def _rsa_encrypt(text, pubKey, modulus):
        # Textbook RSA (no padding) on the reversed key string
        text = text[::-1]
        rs = pow(int(binascii.hexlify(text), 16), int(pubKey, 16), int(modulus, 16))
        return format(rs, 'x').zfill(256)

    @staticmethod
    def _create_secret_key(size):
        # Random 16-character hex string used as the second AES key
        return (''.join(map(lambda xx: (hex(ord(xx))[2:]), os.urandom(size))))[0:16]

    def get_playlist_id(self, action):
        # Scrape the playlist ids from one page of the hot-playlist listing
        request = urllib2.Request(action, headers=self.header)
        response = urllib2.urlopen(request)
        html = response.read().decode('utf-8')
        response.close()
        soup = BeautifulSoup(html, 'lxml')
        list_url = soup.select('ul#m-pl-container li div a.msk')
        for k, v in enumerate(list_url):
            # Strip the leading '/playlist?id=' (13 characters) from the link
            list_url[k] = v['href'][13:]
        return list_url

    def get_playlist_detail(self, id):
        text = {
            'id': id,
            'limit': '100',
            'total': 'true'
        }
        text = json.dumps(text)
        nonce = '0CoJUm6Qyw8W8jud'
        pubKey = '010001'
        modulus = ('00e0b509f6259df8642dbc35662901477df22677ec152b5ff68ace615bb7'
                   'b725152b3ab17a876aea8a5aa76d2e417629ec4ee341f56135fccf695280'
                   '104e0312ecbda92557c93870114af6c9d05c4f7f0c3685b7a46bee255932'
                   '575cce10b424d813cfe4875d3e82047b97ddef52741d546b8e289dc6935b'
                   '3ece0462db0a22b8e7')
        secKey = self._create_secret_key(16)
        # Encrypt the parameters twice with AES, then encrypt the random key with RSA
        encText = self._aes_encrypt(self._aes_encrypt(text, nonce), secKey)
        encSecKey = self._rsa_encrypt(secKey, pubKey, modulus)
        data = {
            'params': encText,
            'encSecKey': encSecKey
        }
        action = 'http://music.163.com/weapi/v3/playlist/detail'
        playlist_detail = self._http_request('POST', action, data)
        return playlist_detail


if __name__ == '__main__':
    nn = NetEaseAPI()
    index = 1
    # 37 pages of hot playlists ("cat=%E5%85%A8%E9%83%A8" is the category "全部", i.e. all), 35 per page
    for flag in range(1, 38):
        if flag > 1:
            page = (flag - 1) * 35
            url = 'http://music.163.com/discover/playlist/?order=hot&cat=%E5%85%A8%E9%83%A8&limit=35&offset=' + str(
                page)
        else:
            url = 'http://music.163.com/discover/playlist'
        playlist_id = nn.get_playlist_id(url)
        for item_id in playlist_id:
            playlist_detail = nn.get_playlist_detail(item_id)
            # codecs.open accepts the unicode chunks json.dump emits when ensure_ascii=False
            with codecs.open('{0}.json'.format(index), 'w', encoding='utf-8') as file_obj:
                json.dump(playlist_detail, file_obj, ensure_ascii=False)
            index += 1
            print('Wrote JSON file:', item_id)
```

4. Feature engineering and data preprocessing: extracting the feature information this recommender needs

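The 1291 raw JSON files contain a lot of information (genre, artist, play counts, song duration, release date), and with a little thought you will surely see ways to use it to improve the recommender further. Here I stick to the most basic music information: we assume that songs in the same playlist are relatively similar to one another.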

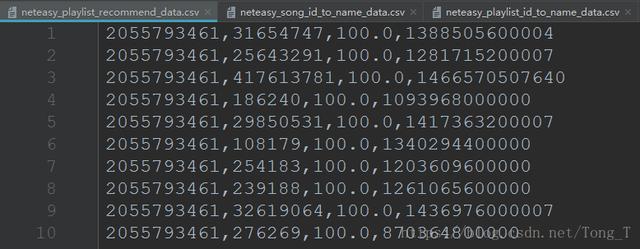
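The assumption that songs sharing a playlist are similar can be made concrete with a co-occurrence measure. The sketch below is only an illustration under that assumption: it uses Jaccard similarity over playlist membership (a swapped-in technique, not what the rest of this article uses, which is Surprise's KNNBasic), and the function name and toy data are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def cooccurrence_similarity(playlists):
    """Jaccard similarity between songs, based on which playlists contain them.

    playlists: dict mapping playlist id -> list of song ids (hypothetical input shape).
    Returns a dict mapping (song_a, song_b), with song_a < song_b, -> similarity.
    """
    # Invert the data: song id -> set of playlists that contain it
    song_to_playlists = defaultdict(set)
    for playlist_id, songs in playlists.items():
        for song in songs:
            song_to_playlists[song].add(playlist_id)

    sims = {}
    for a, b in combinations(sorted(song_to_playlists), 2):
        shared = song_to_playlists[a] & song_to_playlists[b]
        if shared:  # keep only pairs that co-occur in at least one playlist
            union = song_to_playlists[a] | song_to_playlists[b]
            sims[(a, b)] = len(shared) / len(union)
    return sims


# Hypothetical toy data: three playlists with made-up song ids
playlists = {
    'p1': ['s1', 's2', 's3'],
    'p2': ['s1', 's2'],
    'p3': ['s3', 's4'],
}
sims = cooccurrence_similarity(playlists)
print(sims[('s1', 's2')])  # 1.0: s1 and s2 always appear together
print(sims[('s3', 's4')])  # 0.5: they share p3, and together appear in p1 and p3
```

Pairs that never share a playlist are simply absent from the result, which keeps the table sparse.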

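Converting playlist data => recommender-format data: the most basic data format supported by mainstream Python recommender frameworks is that of the MovieLens dataset, whose rating records have the form `user item rating timestamp`. For simplicity, we convert our data into this format as well: in the code below, each playlist plays the role of a user, each song is an item, the song's `pop` (popularity) field serves as the rating, and its `publishTime` serves as the timestamp.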
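As a worked example of this mapping, the sketch below turns one playlist dict into `user item rating timestamp` rows. It assumes only the fields the preprocessing code reads (`playlist.id`, `tracks[].id`, `tracks[].pop`, `tracks[].publishTime`); the function name and all values are hypothetical.

```python
def playlist_to_rows(playlist_json):
    """Turn one crawled playlist dict into (user, item, rating, timestamp) rows.

    Mapping: playlist id -> user, song id -> item,
    song 'pop' -> rating, song 'publishTime' -> timestamp.
    """
    playlist = playlist_json['playlist']
    return [
        (playlist['id'], track['id'], track['pop'], track['publishTime'])
        for track in playlist['tracks']
    ]


# Hypothetical example shaped like the crawled JSON (all values are made up)
sample = {
    'playlist': {
        'id': 111,
        'tracks': [
            {'id': 9001, 'pop': 100, 'publishTime': 1514736000000},
            {'id': 9002, 'pop': 95, 'publishTime': 1517414400000},
        ],
    }
}
for row in playlist_to_rows(sample):
    print(','.join(str(v) for v in row))
# 111,9001,100,1514736000000
# 111,9002,95,1517414400000
```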

```python
# -*- coding:utf-8 -*-
"""
Preprocess the JSON files crawled from all NetEase Cloud Music playlists into CSV files.
Python 3.6 environment.
"""
import csv
import json

PLAYLIST_FILE_COUNT = 1291  # crawled files are named 1.json ... 1291.json


def load_playlist_files():
    """Yield (file index, parsed JSON dict) for every crawled playlist file."""
    for i in range(1, PLAYLIST_FILE_COUNT + 1):
        with open("neteasy_playlist_data/{0}.json".format(i), 'r', encoding='UTF-8') as load_f:
            yield i, json.load(load_f)


def parse_playlist_item():
    """
    :return: writes rows in "userid,itemid,rating,timestamp" format
    """
    # csv.writer quotes fields containing commas; 'w' mode avoids duplicate rows on re-runs
    with open("neteasy_playlist_recommend_data.csv", 'w', encoding='utf8', newline='') as file:
        writer = csv.writer(file, lineterminator='\n')
        for i, load_dict in load_playlist_files():
            try:
                for item in load_dict['playlist']['tracks']:
                    # playlist id, song id, score (song popularity), datetime (publish time)
                    writer.writerow([load_dict['playlist']['id'], item['id'],
                                     item['pop'], item['publishTime']])
            except Exception:
                # Report files with an unexpected structure and skip them
                print(i)


def parse_playlist_id_to_name():
    with open("neteasy_playlist_id_to_name_data.csv", 'w', encoding='utf8', newline='') as file:
        writer = csv.writer(file, lineterminator='\n')
        for i, load_dict in load_playlist_files():
            try:
                # playlist id, playlist name
                writer.writerow([load_dict['playlist']['id'], load_dict['playlist']['name']])
            except Exception:
                print(i)


def parse_song_id_to_name():
    with open("neteasy_song_id_to_name_data.csv", 'w', encoding='utf8', newline='') as file:
        writer = csv.writer(file, lineterminator='\n')
        for i, load_dict in load_playlist_files():
            try:
                for item in load_dict['playlist']['tracks']:
                    # song id, "song name-first artist name"
                    writer.writerow([item['id'], item['name'] + '-' + item['ar'][0]['name']])
            except Exception:
                print(i)


if __name__ == '__main__':
    # Run the steps one at a time
    # parse_playlist_item()
    # parse_playlist_id_to_name()
    parse_song_id_to_name()
```

5. Data description

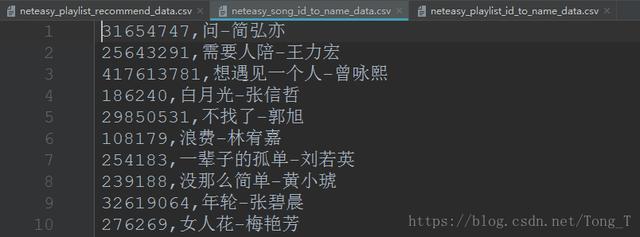

We need to keep the playlist id => playlist name and song id => song name mappings for later use. Song id => song name:

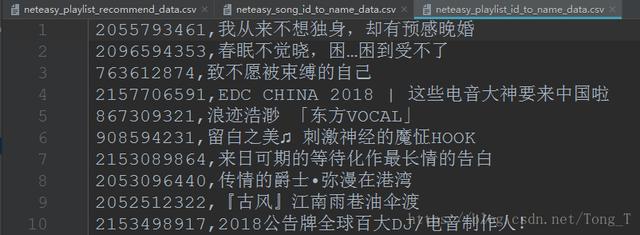

Playlist id => playlist name:

6. Common engineering practice for recommender systems

project = offline modelling + online prediction

1) Offline

Python (scripting language)

2) Online

Efficiency first: C++/Java
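The offline/online split can be sketched as follows: an offline job precomputes the top-k similar items for every item, and the online side only performs a dictionary lookup. This is a minimal illustration assuming a similarity dict is already available; the function names and scores are hypothetical, and a production service would of course load the table into C++/Java rather than Python.

```python
import heapq


def build_similar_items_table(similarity, k=2):
    """Offline step: precompute the top-k most similar items for every item.

    similarity: dict mapping item -> {other_item: score}.
    Returns a plain dict item -> [other_item, ...] (best first), i.e. a
    key-value table that an online service could load and serve directly.
    """
    table = {}
    for item, scores in similarity.items():
        top = heapq.nlargest(k, scores.items(), key=lambda pair: pair[1])
        table[item] = [other for other, _ in top]
    return table


def recommend_similar(table, item):
    """Online step: a single dictionary lookup, no model computation."""
    return table.get(item, [])


# Hypothetical similarity scores between four songs
similarity = {
    'a': {'b': 0.9, 'c': 0.4, 'd': 0.1},
    'b': {'a': 0.9, 'c': 0.2},
    'c': {'a': 0.4, 'b': 0.2, 'd': 0.7},
    'd': {'c': 0.7, 'a': 0.1},
}
table = build_similar_items_table(similarity, k=2)
print(recommend_similar(table, 'a'))  # ['b', 'c']
print(recommend_similar(table, 'x'))  # [] for an unknown item
```

All the expensive work (scoring and sorting) happens once offline; serving a request costs one hash lookup.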

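Principle: whatever can be computed offline in advance should be computed offline; ideally, the online side is just a K-V (key-value) dictionary.

1. Recommendations for users: NetEase Cloud Music's daily recommendations (30 songs per day / 7 songs)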

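2. Recommendations for songs: while you are listening to a song, find "similar songs"

7. A brief introduction to the Surprise recommendation library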

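To build the recommender we will use the Python library Surprise (Simple Python RecommendatIon System Engine), one of the scikits (many of you will have used scikit-learn, scikit-image, and similar libraries).

For more detail, study Surprise in depth alongside the blog post "Python推荐系统库——Surprise" (Python recommender library: Surprise).

8. NetEase Cloud Music playlist recommendation

We use a KNN collaborative filtering algorithm from the Surprise library to model the existing data and find similar playlists.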
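Surprise's `KNNBasic` is user-based and uses MSD similarity by default (matching the "Computing the msd similarity matrix" line in the log below), so with playlists as users, `get_neighbors` returns the playlists most similar to the query playlist. The sketch below reimplements that idea in pure Python, using the MSD similarity formula from Surprise's documentation, sim = 1 / (msd + 1). It is not Surprise's code (it ignores details such as `min_support` and the precomputed similarity matrix), and the function names and data are hypothetical.

```python
def msd_similarity(ratings_u, ratings_v):
    """MSD-based similarity: 1 / (mean squared difference over common items + 1).

    Returns 0.0 when the two users (playlists) share no items.
    """
    common = ratings_u.keys() & ratings_v.keys()
    if not common:
        return 0.0
    msd = sum((ratings_u[i] - ratings_v[i]) ** 2 for i in common) / len(common)
    return 1.0 / (msd + 1.0)


def nearest_playlists(ratings, target, k=10):
    """Return up to k playlists most similar to `target` (user-based KNN).

    ratings: dict playlist id -> {song id: rating}.
    """
    scores = [
        (msd_similarity(ratings[target], ratings[other]), other)
        for other in ratings
        if other != target
    ]
    scores.sort(key=lambda pair: pair[0], reverse=True)
    return [other for _, other in scores[:k]]


# Hypothetical ratings (song popularity values) for four playlists
ratings = {
    'p1': {'s1': 100, 's2': 90},
    'p2': {'s1': 100, 's2': 80},
    'p3': {'s1': 60, 's3': 100},
    'p4': {'s4': 100},
}
print(nearest_playlists(ratings, 'p1', k=2))  # ['p2', 'p3']
```

Here p1 and p2 share two songs with close ratings (msd = 50, sim = 1/51), p3 shares one song with a large gap (sim = 1/1601), and p4 shares nothing (sim = 0).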

```python
# -*- coding:utf-8 -*-
"""
Recommend NetEase Cloud Music playlists with the KNN collaborative filtering
algorithm of the Surprise library.
Python 2.7 environment.
"""
from __future__ import (absolute_import, division, print_function, unicode_literals)
import os
import csv
from surprise import KNNBaseline, Reader, KNNBasic, KNNWithMeans
from surprise import Dataset


def recommend_model():
    file_path = os.path.expanduser('neteasy_playlist_recommend_data.csv')
    # Specify the file format
    reader = Reader(line_format='user item rating timestamp', sep=',')
    # Load the data from the file
    music_data = Dataset.load_from_file(file_path, reader=reader)
    # Train on the full data set; KNNBasic is user-based by default,
    # so it computes playlist-to-playlist similarities
    train_set = music_data.build_full_trainset()
    print('Training the recommendation model with collaborative filtering...')
    algo = KNNBasic()
    algo.fit(train_set)
    return algo


def load_id_name_mapping(csv_path):
    """Read an "id,name" CSV and return (id -> name, name -> id) dictionaries."""
    id_name_dic = {}
    name_id_dic = {}
    with open(csv_path) as f:
        for row in csv.reader(f):
            id_name_dic[row[0]] = row[1]
            name_id_dic[row[1]] = row[0]
    return id_name_dic, name_id_dic


def playlist_data_preprocessing():
    return load_id_name_mapping('neteasy_playlist_id_to_name_data.csv')


def song_data_preprocessing():
    return load_id_name_mapping('neteasy_song_id_to_name_data.csv')


def playlist_recommend_main():
    print("Loading the playlist id -> playlist name mapping...")
    print("Loading the playlist name -> playlist id mapping...")
    id_name_dic, name_id_dic = playlist_data_preprocessing()
    print("Mappings loaded...")
    print('Building the data set...')
    algo = recommend_model()
    print('Model training finished...')

    # Pick an arbitrary playlist as the query (dict order is arbitrary)
    current_playlist_id = list(id_name_dic.keys())[200]
    print('Current playlist id:', current_playlist_id)
    current_playlist_name = id_name_dic[current_playlist_id]
    print('Current playlist name:', current_playlist_name)
    playlist_inner_id = algo.trainset.to_inner_uid(current_playlist_id)
    print('Current playlist inner id:', playlist_inner_id)

    # Find the 10 nearest playlists in the similarity matrix
    playlist_neighbors = algo.get_neighbors(playlist_inner_id, k=10)
    playlist_neighbors_id = (algo.trainset.to_raw_uid(inner_id) for inner_id in playlist_neighbors)
    # Convert playlist ids to playlist names
    playlist_neighbors_name = (id_name_dic[playlist_id] for playlist_id in playlist_neighbors_id)
    print("The 10 playlists closest to <", current_playlist_name, '> are:\n')
    for playlist_name in playlist_neighbors_name:
        print(playlist_name, name_id_dic[playlist_name])


if __name__ == '__main__':
    playlist_recommend_main()

# Sample output:
# Loading the playlist id -> playlist name mapping...
# Loading the playlist name -> playlist id mapping...
# Mappings loaded...
# Building the data set...
# Training the recommendation model with collaborative filtering...
# Computing the msd similarity matrix...
# Done computing similarity matrix.
# Model training finished...
# Current playlist id: 2056644233
# Current playlist name: 暖阳微醺◎来碗甜度100%的糖水吧
# Current playlist inner id: 444
# The 10 playlists closest to < 暖阳微醺◎来碗甜度100%的糖水吧 > are:
#
# 2018全年抖腿指南,老铁你怕了吗? 2050704516
# 2018欧美最新流行单曲推荐【持续更新】 2042762698
# 「女毒电子」●酒心巧克力般的甜蜜圈套 2023282769
# 『 2018优质新歌电音推送 』 2000367772
# 那些为电音画龙点睛的惊艳女Vocals 2081768956
# 女嗓篇 |不可以这么俏皮清新 我会喜欢你的 2098623867
# 「柔美唱腔」时光不敌粉嫩少女心 2093450772
# 「节奏甜食」次点甜醹发酵的牛奶草莓 2069080336
# 03.23 ✘ 欧美热浪新歌 ‖ 周更向 2151684623
# 开门呀 小可爱送温暖 2151816466
```

Evaluating and validating the collaborative filtering model:

```python
import os
from surprise import Reader, Dataset, KNNBasic, evaluate

file_path = os.path.expanduser('neteasy_playlist_recommend_data.csv')
# Specify the file format.
# Note: Reader's default rating_scale is (1, 5), while the 'pop' ratings here are much larger.
# Predictions are clipped to the rating scale, which likely explains the very large RMSE/MAE below.
reader = Reader(line_format='user item rating timestamp', sep=',')
# Load the data from the file
music_data = Dataset.load_from_file(file_path, reader=reader)
# Split into 5 folds.
# split() and evaluate() only exist in older Surprise releases; newer ones provide
# surprise.model_selection.cross_validate(algo, music_data, measures=['RMSE', 'MAE'], cv=5) instead.
music_data.split(n_folds=5)
algo = KNNBasic()
perf = evaluate(algo, music_data, measures=['RMSE', 'MAE'])
print(perf)

"""
Evaluating RMSE, MAE of algorithm KNNBasic.

------------
Fold 1
Computing the msd similarity matrix...
Done computing similarity matrix.
RMSE: 85.4426
MAE:  82.4766
------------
Fold 2
Computing the msd similarity matrix...
Done computing similarity matrix.
RMSE: 85.2970
MAE:  82.0756
------------
Fold 3
Computing the msd similarity matrix...
Done computing similarity matrix.
RMSE: 85.2267
MAE:  82.0697
------------
Fold 4
Computing the msd similarity matrix...
Done computing similarity matrix.
RMSE: 85.3390
MAE:  82.1538
------------
Fold 5
Computing the msd similarity matrix...
Done computing similarity matrix.
RMSE: 86.0862
MAE:  83.2907
------------
------------
Mean RMSE: 85.4783
Mean MAE : 82.4133
------------
------------
defaultdict(<type 'list'>, {u'mae': [82.476559473072456, 82.075552111584656, 82.069740410693527, 82.153816350251844, 83.29069767441861], u'rmse': [85.442585928330303, 85.29704915378538, 85.22667089592963, 85.339041675515148, 86.086152088447705]})
"""
```
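The evaluation reports RMSE and MAE. Surprise's `Reader` defaults to `rating_scale=(1, 5)`, and `predict` clips estimates to the trainset's rating scale by default; since the ratings here are song `pop` values, which appear to be far above 5 (an assumption based on the errors above, not verified against the data), even perfect raw estimates end up far off after clipping. The pure-Python sketch below illustrates that effect with hypothetical numbers; it is not Surprise's implementation, and the helper names are made up.

```python
import math


def rmse(pairs):
    """Root mean squared error over (true, predicted) pairs."""
    return math.sqrt(sum((t - p) ** 2 for t, p in pairs) / len(pairs))


def mae(pairs):
    """Mean absolute error over (true, predicted) pairs."""
    return sum(abs(t - p) for t, p in pairs) / len(pairs)


def clip(value, low=1, high=5):
    """Clamp a prediction into the rating scale (1, 5 mirror Reader's default)."""
    return max(low, min(high, value))


# Hypothetical 'pop' ratings, and raw predictions that happen to be perfect
true_ratings = [100, 95, 60]
raw_predictions = [100, 95, 60]

unclipped = list(zip(true_ratings, raw_predictions))
clipped = [(t, clip(p)) for t, p in unclipped]
print(rmse(unclipped), mae(unclipped))  # 0.0 0.0
print(mae(clipped))  # 80.0: every prediction is forced down to 5
```

If this is indeed the cause, passing a wider scale such as `Reader(line_format='user item rating timestamp', sep=',', rating_scale=(0, 100))` is worth trying; the `(0, 100)` range is an assumption about `pop`. The neighbor lists above should not be affected, because MSD similarity is computed from the raw ratings.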
